Illustration by optimizationtheory.com

Do you remember the Internet of the mid-’90s? Probably not all of you do. It was an Internet with no social platforms or Wikipedia, built by enthusiasts and garage companies.

An algorithm introduced back then became one of the pillars of the most popular search engine in the world. Not so long ago, its score was a key parameter for quickly estimating a website’s potential; now it is missing. How do you “survive” when it’s no longer available? We’ll describe metrics that can be treated as a “replacement”, show you how to interpret them, and point out what to be careful about when using them.

A few words about Page Rank.

If you’re not interested in Page Rank itself, skip it. 🙂 We have put this here for educational purposes and to help you understand other authority estimation algorithms derived from Page Rank.

What is Page Rank?

Page Rank is an algorithm designed to estimate a website’s authority by taking links (as a form of citation) into consideration.

Methods of this sort were used earlier to rate scientific publications; Page Rank is a development of these techniques.

The assumption seems quite natural: website A increases website B’s authority by linking to it (considering it a valuable source). The more authority website A has, the more authority is passed through the link.

The probabilistic interpretation of Page Rank is as follows: it is the likelihood that a person randomly clicking on links will arrive at a particular page. This means that better-linked websites have a greater Page Rank score (especially when linked from other high Page Rank websites).

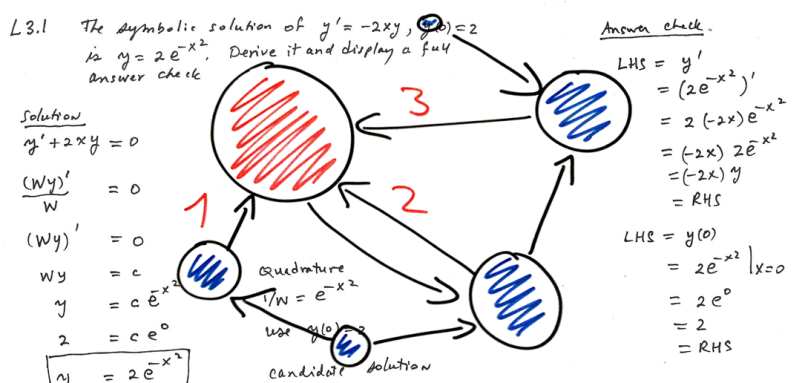

How is Page Rank computed?

In the example, you can see a small network of 10 websites (red nodes). Graph edges (arrows) stand for links. The greater the diameter of a node, the higher the Page Rank value (the differences have been exaggerated).

INITIALIZATION: Each node is assigned the same initial Page Rank value.

1-5: ALGORITHM ITERATIONS. During each iteration, the Page Rank value of a node is updated from the Page Rank of the nodes linking to it, with each linking node’s value divided by the number of websites that node links to. Additionally, the passed authority is lowered by multiplying it by a damping factor (originally: 0.85), as linking power cannot be passed on infinitely (the average Internet user will stop following links sooner or later).

The algorithm stops when the difference between two consecutive iterations falls below the desired error level (convergence).
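The steps above can be sketched in a few lines of Python. This is only a minimal illustration of the textbook idea, not the implementation used by Google: the function name `pagerank`, the tiny example graph, the way pages without outgoing links are handled and the convergence test (sum of absolute differences) are all our own assumptions.

```python
def pagerank(links, damping=0.85, tol=1e-6, max_iter=200):
    """links: dict mapping each page to the list of pages it links to."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    # INITIALIZATION: every node starts with the same value.
    rank = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Assumption: authority of pages with no outgoing links is spread evenly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                # A page splits its (damped) authority among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
        diff = sum(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if diff < tol:  # convergence: the scores barely change any more
            break
    return rank


# Hypothetical four-page network: C is linked from A, B and D.
graph = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
scores = pagerank(graph)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(page, round(score, 4))
```

In this toy network, page C collects the most authority because three pages link to it, while D, which nobody links to, keeps only the minimum value.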

History

1996 – Page Rank was developed by Larry Page and Sergey Brin during their studies at Stanford University.

1998 – the paper describing Page Rank was published.

1998 – the algorithm was patented (the patent belongs to Stanford University; the university received 1.8 million shares of Google in exchange for use of the patent).

2005 – rel=”nofollow” was introduced – to mark links not passing Page Rank.

2009 – Page Rank values were removed from Webmaster Tools (Search Console)

Nov 2013 – the last Page Rank update available for public viewing

15 Apr 2016 – Google stopped disclosing any PR value (which was already outdated at that point)

Is Page Rank completely “gone”?

Page Rank values are no longer available; that’s all we know for sure. Does it mean the algorithm has been completely eliminated by Google? Almost certainly not. Linking power is still one of the most crucial ranking factors, and Google is likely to employ algorithms estimating the authority passed by links, probably Page Rank or algorithms derived from it.

Ok, Page Rank is no longer available to us. What’s left?

There is a wide range of parameters which can help us. It is always good to understand their meaning and interpret their values correctly.

Some of them are pretty intuitive (being just a number, e.g. the number of links); others have been normalised into a 0-100 or 0-10 range. This usually means we are dealing with a logarithmic scale, so a domain with a parameter value of 50 is way stronger than one at 40 (not just “by 25%” 🙂 ).
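To see why ten points on such a scale are not “25%”, here is a tiny sketch. It assumes a purely hypothetical base-10 scale on which every 10 points mean ten times more raw strength; the vendors do not publish their exact scaling, so the function `raw_strength_ratio` and its `points_per_tenfold` parameter are illustrative only.

```python
def raw_strength_ratio(score_a, score_b, points_per_tenfold=10.0):
    """How many times stronger score_a is than score_b on an assumed log scale."""
    return 10 ** ((score_a - score_b) / points_per_tenfold)


# Hypothetical comparison of a domain scoring 50 with one scoring 40.
ratio = raw_strength_ratio(50, 40)
print(ratio)
```

Under this assumption the first domain would be ten times stronger, not 25% stronger. The real factor depends on the scaling each tool uses.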

Google indexation

Being indexed in Google is one of the most basic quality estimation criteria. While a whole domain missing from Google search results might end the analysis at this point, URL-level indexation can be a good quality indicator. By indexing a subpage, Google suggests that it might have some valuable content for users.

The estimated number of domain’s subpages indexed in Google can be checked with the following query:

site:domainname.com

You may check page-level indexation as well:

info:http://domainname.com/subpage

or check Google cache content for this page:

cache:http://domainname.com/subpage

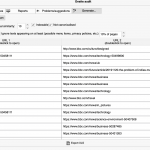

For bulk-checking the indexation, it is advised to use some tools to automate the process.
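As a starting point for such automation, the queries above can be generated for a whole list of domains. The sketch below only builds the search URLs and does not fetch anything; the helper name `google_query_url` and the second example domain are our own assumptions.

```python
from urllib.parse import quote_plus


def google_query_url(operator, target):
    """Build a search URL for an operator query such as site:domainname.com."""
    query = f"{operator}:{target}"
    return "https://www.google.com/search?q=" + quote_plus(query)


# Hypothetical list of domains to check.
domains = ["domainname.com", "example.org"]
site_urls = [google_query_url("site", domain) for domain in domains]
info_url = google_query_url("info", "http://domainname.com/subpage")

for url in site_urls:
    print(url)
print(info_url)
```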

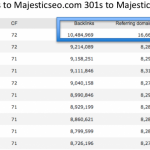

Majestic SEO

The most important Majestic params:

BL – number of links to URL/domain

RD (referring domains) – number of unique linking domains (a domain which has 10 links from one website will have BL=10 and RD=1)

Referring IPs – number of unique linking IP addresses

Referring Subnets (Referring C-subnets) – if a website has two links from domains on IP addresses with the only difference in last octet, such as: 1.2.3.4 and 1.2.3.5, the website has links from just one C-subnet.

CF (Citation Flow) – reflects the power of links referring to a subpage/domain. Range: 0 – 100.

TF (Trust Flow) – reflects trust passed through links leading to a website. The authors have manually reviewed and selected some top-quality websites. The closer your website is linked to this set, the higher the Trust Flow value. Range: 0-100.
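The difference between backlinks, referring domains, referring IPs and C-subnets is easy to see on a small sample. The sketch below is not Majestic’s code; it assumes IPv4 addresses, treats a C-subnet as a /24 network, and uses made-up link data and a hypothetical helper `link_profile_counts`.

```python
import ipaddress


def link_profile_counts(links):
    """links: iterable of (referring_domain, ip_address) pairs, one per backlink."""
    links = list(links)
    domains = {domain for domain, _ in links}
    ips = {ip for _, ip in links}
    # Addresses that differ only in the last octet fall into the same /24 network.
    subnets = {ipaddress.ip_network(f"{ip}/24", strict=False) for ip in ips}
    return {"BL": len(links), "RD": len(domains), "IPs": len(ips), "subnets": len(subnets)}


# Made-up sample: two links from a.com, one each from b.com and c.com.
sample = [
    ("a.com", "1.2.3.4"),
    ("a.com", "1.2.3.4"),
    ("b.com", "1.2.3.5"),
    ("c.com", "5.6.7.8"),
]
counts = link_profile_counts(sample)
print(counts)
```

Here 1.2.3.4 and 1.2.3.5 count as two referring IPs but only one C-subnet.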

Majestic gathers links and parameters in two indexes:

Majestic Fresh – based on links active within the previous 3 months (mostly alive).

Majestic Historic – based on any discovered links (even a few years old), thus larger but containing links already lost/deleted.

A quick look at these params might help you draw some likely conclusions:

- large number of backlinks (BL), but very few referring domains (RD) – possible SITE-WIDE links (on each subpage) or links from websites indexing the same content on many subpages (such as multiple tags or search results)

- low ratio of referring IPs / subnets to referring domains (RD) – links might come from PBNs or SEO-hosting

- low Trust Flow value compared to Citation Flow – low quality links risk

- far fewer links (many times fewer) in the fresh index than in the historic one – possible heavy link building (blasts?) history

Some SEOs like to rely on a metric derived from MJ params: PowerTrust = TF * CF. Intuitively, high PowerTrust might mean strong linking power together with good trust – a very desirable situation.
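The rules of thumb above, together with PowerTrust, can be turned into a simple checklist. This is just a sketch: every threshold below (50, 0.5, 10) is an arbitrary value picked for illustration, and the function names `majestic_flags` and `power_trust` are our own, not part of any Majestic API.

```python
def majestic_flags(bl, rd, subnets, tf, cf, fresh_bl, historic_bl):
    """Return warnings suggested by the heuristics; all thresholds are hypothetical."""
    flags = []
    if rd and bl / rd > 50:
        flags.append("possible site-wide links")
    if rd and subnets / rd < 0.5:
        flags.append("possible PBN or SEO-hosting links")
    if cf and tf / cf < 0.5:
        flags.append("low quality links risk")
    if fresh_bl and historic_bl / fresh_bl > 10:
        flags.append("possible link blast history")
    return flags


def power_trust(tf, cf):
    """PowerTrust = TF * CF, as described in the text."""
    return tf * cf


# Made-up values for a suspicious and a healthy-looking domain.
suspicious = majestic_flags(bl=12000, rd=40, subnets=12, tf=8, cf=35,
                            fresh_bl=500, historic_bl=12000)
healthy = majestic_flags(bl=300, rd=120, subnets=100, tf=30, cf=35,
                         fresh_bl=250, historic_bl=400)
print(suspicious)
print(healthy, power_trust(30, 35))
```

Treat the output as a list of things worth a manual look rather than a verdict.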

Ahrefs params

Backlinks (BL), Referring Domains (RD), Referring IPs, Referring Subnets – interpretation is the same as for Majestic.

URL Rating – Overall estimation of links power and quality. Range: 0-100.

Domain Rating – same as URL Rating, but for the whole domain. Range: 0-100.

Sadly, Ahrefs discloses almost nothing about how these factors are calculated. They do say, however, that these factors correlate well with visibility metrics. 😉

Selection of Moz params

Domain Authority – general estimation of “how well will domain perform in search results?”. DA combines other Moz metrics into one.

Range: 0-100.

Important notice: Domain Authority of a subdomain will match the top domain’s DA! Checking DA for a wordpress.com blog will return DA > 90, which does not mean that every wordpress.com-powered blog is going to conquer the search results. 😉

Page Authority – page-level equivalent of Domain Authority.

MozRank – reflects a URL’s link “popularity”, considering the number and quality of links leading to the URL. Both internal and external links are taken into consideration. External MozRank is the equivalent based on external links only. MozRank has a range of 0-10.

Alexa rank

Alexa rank was initially based on data gathered from Alexa toolbar users and browser plugins. Alexa rank should reflect real user traffic to the website. Certainly, there might be some concerns such as:

– different toolbar popularity in different countries

– some types of users will install it and others won’t

– methodology leaves some space for manipulation

Since 2008, Alexa has also taken into consideration data sources other than toolbar users.

The lower the ranking, the better. The most popular website by Alexa has a ranking of 1.

Notes: Alexa rank for a subdomain is usually the same as for its top domain. There are some exceptions, such as blogs on popular blogging platforms, e.g. blogspot.com

Popularity in social networks

The number of social media links may not be a direct ranking factor, but that does not make this parameter worthless. We can draw some conclusions, such as:

– website popular in social networks can have some serious traffic

– website popular in social networks can have links from other sources as well

– website popular in social networks can have some viral content or… nice budget for social media promotion 😉

Tools such as SharedCount or Search Auditor can help you to check the number of shares (of page or domain’s homepage) in popular social services such as: Facebook, Google+, Twitter (recently limited), LinkedIn, Pinterest or StumbleUpon.

Domain’s age

Domain’s age can be considered in at least two ways:

– WHOIS creation date

– Domain’s age by web.archive.org – the moment of first indexation by the popular Internet archive. web.archive.org allows you to check the historical content of a domain as well (which can help you when you’re thinking of buying a domain).

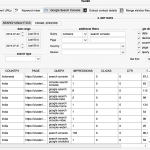

Search engine visibility

There are a lot of tools monitoring search engine results and building visibility indexes. Before starting the actual analysis, it is worth checking if your tool monitors the market you’re interested in. Some global tools might not analyse your preferred Google version or provide very shallow analysis of your country/language.

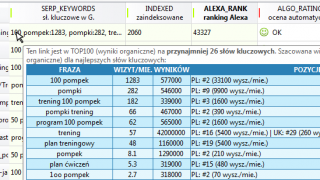

In this example, we describe visibility factors by Clusteric Search Auditor, but most of them can be obtained from other tools as well.

DOMAIN_VISIBILITY – estimated number of organic visits to domain (monthly).

SERP_VISIBILITY – estimated number of organic visits to URL (monthly).

SERP_TOPIC – Topics the website is visible for in search results.

SERP_SUBTOPIC – Subtopics the website is visible for in search results.

SERP_KEYWORDS – Exact keywords the URL is visible for and their potential (position, number of results, number of searches).

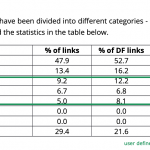

Which parameter can replace Page Rank as a general potential estimator?

“That depends”. 🙂

When talking about the algorithm concept…

…there are some parameters applying the same approach as Page Rank (authority estimation based on links). However, it is worth noting, that:

– These are the estimations provided by third parties and not Google itself.

– The general concept of Page Rank is well known, but the specific implementation used in Google is well protected.

– Nowadays, there are more than 200 ranking factors (some sources say 400 or even more) taken into consideration by a search engine. Maybe there is no more room for one dominant parameter? On the other hand, we’ve got RankBrain and the growing importance of AI in selecting the fittest pages for search results. However, it is unlikely that Google will share the details with us in the form of a new metric.

When talking about the most universal parameter…

…(in our opinion), if you’re going to concentrate on a small subset of parameters, visibility factors may be the best choice.

Possible pros:

– Visibility metrics rely on the quality estimation provided by Google. All the other factors are more or less a part of it. If a website ranks well in Google, it should have some authority, and this authority can be passed on through links.

– Well-visible pages are useful for more than link building alone. Using visibility metrics, you can easily select locations with well-targeted organic traffic, which means potential customers are visiting these websites.

– Using locations that rank for topics of your interest for link building seems more “natural”.

– Visibility metrics combine all the other metrics, both off-site and on-site, which means you usually don’t need to investigate on-site factors on your own if Google already likes the page. 😉

Potential drawbacks:

– While it is almost certain that a website with some visibility can pass its authority, you cannot be certain that a website with no visibility has little or no authority to pass. This can happen when a page is well linked but has no optimized content (or no content at all), or when the website’s visibility has been affected by a Google penalty.

– Visibility metrics are based on search engine monitoring for a selection of keywords, which means these parameters can be lower for pages which have a huge share of long-tail traffic or are visible for a lot of minor keywords.

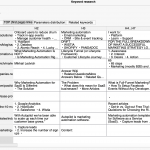

Summary of described factors. 🙂

| Name | Interpretation | Possible values | Notes |
|---|---|---|---|
| Parameters evaluated by Google | | | |
| Domain-level indexation | One of the most basic "status" factors. No indexation = no visibility and no organic traffic. | The number of indexed subpages. No indexation might mean a critical issue to be resolved (e.g. Google ban). | |
| Subpage-level indexation | Is the page indexed in Google? | No indexation (if the page is not new) might mean the page has no real value for a search engine. | |
| Page Rank | Authority based on "voting" by links. | n/a (for pages with no Page Rank), 0-10 | Not disclosed to the public after April 2016. No visible updates after November 2013. |
| Majestic SEO params | | | |
| BL (backlinks) | Number of links referring to URL/domain. | | |
| RD (referring domains) | Number of unique domains linking to URL/domain. | | |
| Referring IPs | Number of unique referring IPs. | | |
| Referring Subnets | Number of referring C-subnets. | | |
| CF (Citation Flow) | Estimates the power of links. | 0-100 | |
| TF (Trust Flow) | Estimates the trust passed through links. | 0-100 | |
| Ahrefs params | | | |
| BL (backlinks) | Number of links referring to URL/domain. | | |
| RD (referring domains) | Number of unique domains linking to URL/domain. | | |
| Referring IPs | Number of unique referring IPs. | | |
| Referring Subnets | Number of referring C-subnets. | | |
| URL Rating | Accounts for the number and quality of links. | 0-100 | |
| Domain Rating | Accounts for the number and quality of links, on a domain level. | 0-100 | |
| Moz params | | | |
| Domain Authority | General indicator of "how well will a domain perform in search results?". Domain Authority combines other Moz metrics into one. | 0-100 | DA for a subdomain will be the same as for the top domain! |
| Page Authority | As above, but page-level. | 0-100 | |
| MozRank | Accounts for the number and quality of links. Both external and internal! | 0-10 | External MozRank takes only external links into consideration. |
| Alexa params | | | |
| Alexa rank | Based on a website's popularity (especially real user traffic). | 1 = the most popular. The higher the ranking number, the less popular the website is. | Some subdomains have their own Alexa rank, some only have the top domain's rank. |
| Alexa rank (local) | Alexa ranking restricted to a given country. | 1 = the most popular. The higher the ranking number, the less popular the website is. | Some subdomains have their own Alexa rank, some only have the top domain's rank. |
| Domain's age | | | |
| WHOIS | The moment of domain registration. | date | |
| web.archive.org | The moment of first indexation by the most popular Internet archive. | date | web.archive.org can be used to investigate a domain's historic content (e.g. before buying the domain) |
| Popularity in social platforms | | | |
| The number of likes/shares (of home page or subpage) on Facebook, Twitter, Google+, LinkedIn, Pinterest, StumbleUpon and other social networks. | Social popularity indicator. Does not have to mean that the website has some SEO power, but it is probable, as it can have links from sources other than social media and some real user traffic as well. | | |
| Visibility factors (by CLUSTERIC Search Auditor) | | | |
| DOMAIN_VISIBILITY | Estimation of monthly visits (to domain) from organic search results. | Covers subdomains' visibility as well, which means myveryownblog.blogspot.com is included in the visibility score for blogspot.com | |
| SERP_VISIBILITY | Estimation of monthly visits (to URL) from organic search results. | Good estimation of a URL's quality and ability to rank in a search engine. | |
| SERP_TOPIC | Search engine visibility, by topic. | | |
| SERP_SUBTOPIC | Search engine visibility, by subtopic. | | |
| SERP_KEYWORDS | TOP keywords the URL is visible for and their potential (position, number of searches, number of results). | | |

Some notes on how to interpret the parameters.

It is good to fully understand what you are relying on. We hope our suggestions will be helpful, especially for beginners.

Almost no parameters and indexes are calculated in real-time.

Today’s Internet means HUGE amounts of data. Most of the metrics are based on information gathered by web crawlers/robots (pieces of software run on servers) designed by web-analytics companies. They visit websites and certainly need time to find and collect new content. Some resources will never be visited by robots. A lot of time is needed not only to collect the data, but also to process it and reduce it to a useful form, especially as we are talking about data gathered by thousands of servers. That being said, the value of a parameter does not refer to the state “here and now”, but to the moment of data collection. Some changes might be reflected in metrics/indexes after a few days, others after a few months.

You want to build your visibility in Google, not Clusteric, Majestic, Ahrefs or Moz.

Google has their own (usually well protected) algorithms and own databases. Other companies design their own algorithms and collect data on their own, which means their metrics represent third-parties’ point of view and are more or less a suggestion.

No one has complete knowledge.

No company is capable of indexing the whole Internet. A subset of websites to be analysed is selected by:

– probability factors (NY Times homepage is more likely to disclose something new and valuable than a millionth profile in a deserted message board)

– cutting down on costs and resources – some pages are just not worth being indexed as they contain very little to no valuable content

– “technical” issues, such as web crawlers being blocked on purpose (to save resources or hide PBNs from competitors)

Understand which resource the parameter value refers to.

Great metrics of top domain or homepage? That’s nice, but think about the value of the subpage containing links to your website. Is it popular / how far (how many clicks) from homepage / are there any links pointing to it? Or maybe it has no real value and even crawlers are not interested in visiting it?

Params can have different values for:

– domain

– the same domain with “www.” prefix

– subdomain/top domain

– specific URL or more than one URL leading to the same content (!)
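Before comparing metrics, it helps to list every variant you may need to look up. The sketch below is a simplification: the helper `lookup_targets` is hypothetical, and it naively treats the last two labels of the host name as the top domain, which is wrong for suffixes such as .co.uk.

```python
from urllib.parse import urlsplit


def lookup_targets(url):
    """List the resources whose metrics may differ for a single link location."""
    host = urlsplit(url).hostname or ""
    bare = host[4:] if host.startswith("www.") else host
    # Naive assumption: the top domain is the last two labels of the host name.
    root = ".".join(bare.split(".")[-2:])
    targets = [url, bare, "www." + bare]
    if root != bare:
        targets.append(root)
    return targets


targets = lookup_targets("http://idonotevenexist.wordpress.com/some-post")
for target in targets:
    print(target)
```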

Want some links from a blog with more than 90/100 Domain Authority? No problem! Just create it. idonotevenexist.wordpress.com – this blog does not even exist yet, but already has DA=95.52. That is simply because Domain Authority refers to the top domain and will be the same for its subdomains. Does it mean the linking power of said blog will be the same as for wordpress.com? Certainly not.

Remember, the off-site factors of URL aren’t everything.

There are more pieces in this puzzle:

– On-site factors (a well-linked website can still be garbage) – more about on-site analysis soon. 🙂

– You have to consider each of your links as a part of your link profile (as a whole), which in most cases should be diverse. Google knows how to detect suspiciously unnatural patterns in your link profile. Consistent linking with dofollow links and the very same anchor text (especially a money keyword) might lead to trouble, even when selecting top-quality link building locations.

Summary

Page Rank is no longer available for public viewing, and what is more, since 2013 we have only had an insight into archival values of this param. Retiring Page Rank made room for other metrics and quality criteria. Some of the alternatives share the same concept (authority estimated by links treated as “votes”), others try to reflect real user traffic or visibility in Google. From our point of view, visibility metrics seem to have great potential, but it is up to you which params you want to employ in your everyday work, as long as you understand their meaning and remember that they are no more than a kind of heuristic with more or fewer limitations.

If you want to share your approach to website’s quality estimation or just want to add some metrics to our list – please let us know! 🙂

Graphics by…:

Featured image by optimizationtheory.com

Image courtesy of iosphere at FreeDigitalPhotos.net
